Greetings, dear readers! In my previous article, which appeared in the November 2024 edition of AutoMark, I briefly explained how Advanced Driver Assistance Systems (ADAS) work and how active and passive ADAS features differ. The next step is to explain the technology behind ADAS. Advanced Driver Assistance Systems use various sensors to make the vehicle safer and to provide many automated driving functions. These sensors are the foundation on which the car scans its environment and makes proper decisions.

A human-operated car works because we have stereoscopic vision, and are able to deduce relative distance and velocity in our brains. Even with one eye closed, we can deduce distance and size using monocular vision fairly accurately because our brains are trained by real-world experience.

Our eyes and brains also allow us to read and react to signs and follow maps, or simply remember which way to go because we know the area. We know how to check mirrors quickly so that we can see in more than one direction without turning our heads around.

Our brains know the rules of driving. Our ears can hear sirens, honking, and other sounds, and our brains know how to react to these sounds in context. Just driving a short distance to buy some milk and bread, a human driver makes thousands of decisions, and makes hundreds of mechanical adjustments, large and small, using the vehicle’s hand and foot controls.

Replacing the optical and aural sensors connected to a brain made up of approximately 86 billion neurons is no easy feat. It requires a suite of sensors and very advanced processing that is fast, accurate, and precise. Truly self-driving cars are being developed to learn from their experience just as human beings do, and to integrate that knowledge into their behavior.

Key types of ADAS sensors in use today

The main types of ADAS sensors in use today are:

- Video cameras

- SONAR

- RADAR

- LiDAR

- GPS/GNSS

We will take a closer look at each of these in this article.

A vehicle needs sensors to replace or augment the senses of the human driver. Our eyes are the main sensor we use when driving, but of course, the stereoscopic images that they provide need to be processed in our brains to deduce relative distance and vectors in a three-dimensional space.

We also use our ears to detect sirens, honking sounds from other vehicles, railroad crossing warning bells, and more. All of this incoming sensory data is processed by our brains and integrated with our knowledge of the rules of driving so that we can operate the vehicle correctly and react to the unexpected.

ADAS systems need to do the same. Cars are increasingly being outfitted with RADAR, SONAR, and LiDAR sensors, as well as getting absolute position data from GPS sensors and inertial data from IMU sensors. The processing computers that take in all this information and create outputs to assist the driver, or take direct action, are steadily increasing in power and speed in order to handle the complex tasks involved in driving.

Video Cameras (Optical Image Sensor)

The first use of cameras in automobiles was the backup camera, also called the “reverse camera.” Combined with a flat video screen on the dashboard, this camera allows drivers to back into a parking space more safely, or to negotiate any maneuver that involves driving in reverse. But the primary initial motivation was to improve pedestrian safety. According to the Department of Transportation, more than 200 people are killed and at least 12,000 more are injured each year because a car backed into them. These victims are mostly children and older people with limited mobility.

Once only installed in high-end cars, backup cameras have been required in all vehicles sold in the USA since May 2018. Canada adopted a similar requirement. The European Commission is moving toward requiring a reversing camera or monitoring system in all cars, vans, trucks, and buses in Europe by 2022. The Transport Ministry in Japan is requiring backup sensors (a camera, ultrasonic sensors, or both) on all automobiles sold in Japan by May 2022.

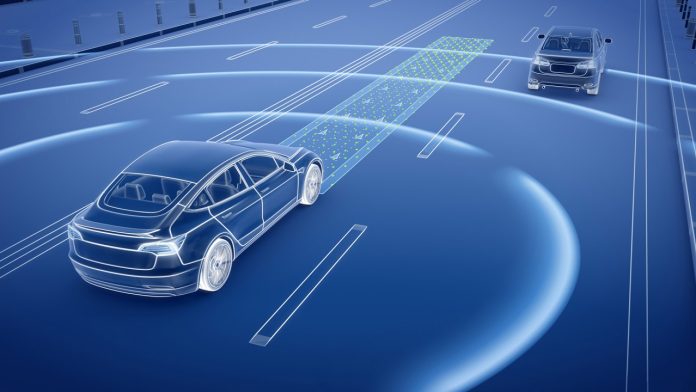

But in today’s ADAS vehicles there can be multiple cameras, pointing in different directions. And they’re not just for backup safety anymore: their outputs are used to build a three-dimensional model of the vehicle’s surroundings within the ADAS computer system. Cameras are used for traffic sign recognition, reading lines and other markings on the road, detecting pedestrians, obstructions, and much more. They can also be used for security purposes, rain detection, and other convenience features as well.

The output of most of these cameras is not visible to the driver. Rather, it feeds into the ADAS computer system. These systems are programmed to process the stream of images and, for example, identify stop signs, understand that another vehicle is signaling a right turn, or notice that a traffic light has just turned yellow. Cameras are also heavily used to detect road markings, which is critical for lane-keeping assistance. All of this involves a huge amount of data and processing power, and the demand is only increasing in the march toward self-driving cars.

SONAR (Sound Navigation & Ranging)

SONAR (Sound Navigation and Ranging) sensors, also called “ultrasound” sensors, generate high-frequency audio on the order of 48 kHz, more than twice the upper limit of the typical human hearing range. (Interestingly, many dogs can hear these sensors, but they don’t seem bothered by them.) When instructed by the car’s ECU, these sensors emit an ultrasonic burst, and then they “listen” for the returning reflections from nearby objects.

By measuring the reflections of this audio, these sensors can detect objects that are close to the vehicle. Ultrasound sensors are heavily used in backup detection and self-parking sensors in cars, trucks, and buses. They are located on the front, back, and corners of vehicles.
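The echo principle described above can be written down in a few lines. The following is only a minimal sketch: the function name `echo_distance_m` and the speed of sound (343 m/s, assuming dry air at about 20 °C) are my own illustrative assumptions, not values taken from any particular sensor.

```python
# Assumed speed of sound in dry air at roughly 20 °C; it changes with temperature.
SPEED_OF_SOUND_M_S = 343.0


def echo_distance_m(round_trip_s: float, speed_m_s: float = SPEED_OF_SOUND_M_S) -> float:
    """Distance to the reflecting object, given the time between burst and echo."""
    if round_trip_s < 0:
        raise ValueError("round-trip time cannot be negative")
    # The burst travels to the object and back, so only half the path is the distance.
    return speed_m_s * round_trip_s / 2.0


# Hypothetical reading: the echo arrives 10 milliseconds after the burst.
print(f"object at about {echo_distance_m(0.010):.2f} m")
```

With these assumed numbers, a 10 ms echo corresponds to an object a little under two meters away, which is the kind of distance that matters in parking.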

Since they operate by moving the air and then detecting acoustic reflections, they are ideal for low-speed applications, when the air around the vehicle is typically not moving very fast. Because they are acoustic in nature, ultrasound performance can be degraded by exposure to an extremely noisy environment.

Ultrasound sensors have a limited range compared to RADAR, which is why they are not used for long-distance measurements, such as those needed for adaptive cruise control or high-speed driving. But if the object is within 2.5 to 4.5 meters (8.2 to 14.76 feet) of the sensor, ultrasound is a less expensive alternative to RADAR. Ultrasound sensors are not used for navigation because their range is limited, and they cannot detect objects smaller than 3 cm (1.18 in.).

Ultrasound sensors are the round “disks” on the back of this car (red circles).

Interestingly, electric car maker Tesla invented a way to project ultrasound through metal, allowing them to hide these sensors all over their cars, to maintain vehicle aesthetics.

RADAR (Radio Detection & Ranging)

RADAR (Radio Detection and Ranging) sensors are used in ADAS-equipped vehicles for detecting large objects in front of the vehicle. They often use a 76.5 GHz RADAR frequency, but other frequencies from 24 GHz to 79 GHz are also used.

Two basic methods of RADAR detection are used:

- Direct propagation

- Indirect propagation

In both cases, however, they operate by means of emitting these radio frequencies and measuring the propagation time of the returned reflections. This allows them to measure both the size and distance of an object and its relative speed.
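The propagation-time idea can be illustrated with a short sketch. The function names and sample numbers below are hypothetical. Relative speed is estimated here simply from how the range changes between two measurements; production automotive RADARs typically also use the Doppler shift of the returned signal, which is not modeled in this example.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0


def radar_range_m(round_trip_s: float) -> float:
    """Range to the target from the round-trip time of the radio pulse."""
    # The pulse travels out and back, so halve the total path length.
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0


def closing_speed_m_s(range_then_m: float, range_now_m: float, interval_s: float) -> float:
    """Relative speed from two range readings; positive means the gap is shrinking."""
    if interval_s <= 0:
        raise ValueError("interval must be positive")
    return (range_then_m - range_now_m) / interval_s


# Hypothetical readings: a reflection returning after one microsecond,
# and a gap that shrank from 100 m to 98 m within 0.1 s.
print(f"range: {radar_range_m(1e-6):.1f} m")
print(f"closing speed: {closing_speed_m_s(100.0, 98.0, 0.1):.1f} m/s")
```

Note how short the times are: a target roughly 150 meters away answers in about a millionth of a second, which is why RADAR detection is so fast.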

Because RADAR signals can reach up to 300 meters in front of the vehicle, they are particularly important during highway-speed driving. Their high frequencies also mean that the detection of other vehicles and obstacles is very fast. Additionally, RADAR can “see” through bad weather and other visibility occlusions. Because the wavelengths are just a few millimeters long, RADAR can detect objects of several centimeters or larger.
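The millimeter-scale wavelength follows directly from the operating frequency (wavelength = speed of light / frequency). As a quick check, here is a small sketch; the helper name `wavelength_mm` is my own.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0


def wavelength_mm(frequency_hz: float) -> float:
    """Wavelength in millimeters for a given radio frequency in hertz."""
    if frequency_hz <= 0:
        raise ValueError("frequency must be positive")
    return SPEED_OF_LIGHT_M_S / frequency_hz * 1000.0


# The frequencies mentioned in the RADAR section: 24 GHz, 76.5 GHz and 79 GHz.
for ghz in (24.0, 76.5, 79.0):
    print(f"{ghz:5.1f} GHz -> {wavelength_mm(ghz * 1e9):.2f} mm")
```

At 76.5 GHz the wavelength works out to just under 4 mm, while the older 24 GHz band is closer to 12.5 mm.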

Applications of Automotive RADAR Systems

RADAR is especially good at detecting metal objects, like cars, trucks, and buses. As the image above shows, it is essential for collision warning and mitigation, blind-spot detection, lane change assistance, parking assistance, adaptive cruise control (ACC), and more.

LiDAR (Light Detection & Ranging)

LiDAR (Light Detection and Ranging) systems are used to detect objects and map their distances in real-time. Essentially, LiDAR is a type of RADAR that uses one or more lasers as the energy source. It should be noted that the lasers used are the same eye-safe types used at the check-out line in grocery stores.

High-end LiDAR sensors rotate, emitting eye-safe laser beams in all directions. LiDAR employs a “time of flight” receiver that measures the reflection time.

An IMU and GPS are typically also integrated with the LiDAR so that the system can measure the time it takes for the beams to bounce back and factor in the vehicle’s displacement during the interim, to construct a high-resolution 3D model of the car’s surroundings called a “point cloud.” Billions of points are captured in real-time to create this 3D model, scanning the environment up to 300 meters (984 ft.) around the vehicle, and within a few centimeters (~1 in.) of accuracy.
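To show how a single laser return becomes one point in the point cloud, here is a simplified sketch. Everything in it is an assumption for illustration: the function name `lidar_point`, the angle conventions (azimuth measured from the forward x-axis, elevation measured up from the horizontal), and the idea that the vehicle only translates, without turning, during the scan.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0


def lidar_point(round_trip_s, azimuth_deg, elevation_deg,
                vehicle_offset_m=(0.0, 0.0, 0.0)):
    """Convert one time-of-flight return into an (x, y, z) point in meters.

    vehicle_offset_m is how far the vehicle has moved since the scan started
    (as an IMU/GPS might report); adding it places every point of the scan in
    the same reference frame. Rotation of the vehicle is ignored here.
    """
    distance = SPEED_OF_LIGHT_M_S * round_trip_s / 2.0  # out-and-back path
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    x = distance * math.cos(el) * math.cos(az)
    y = distance * math.cos(el) * math.sin(az)
    z = distance * math.sin(el)
    ox, oy, oz = vehicle_offset_m
    return (x + ox, y + oy, z + oz)


# Hypothetical return: an object 10 m away, 90 degrees to the left, level with
# the sensor, measured after the car has rolled 0.5 m forward.
t = 2 * 10.0 / SPEED_OF_LIGHT_M_S
print(lidar_point(t, azimuth_deg=90.0, elevation_deg=0.0,
                  vehicle_offset_m=(0.5, 0.0, 0.0)))
```

A real sensor repeats this for every laser and every rotation step, which is how the point counts grow so quickly.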

LiDAR sensors can be equipped with up to 128 lasers inside. The more lasers, the higher the resolution of the 3D point cloud that can be built.

LiDAR can detect objects with much greater precision than RADAR or ultrasound sensors. However, its performance can be degraded by interference from smoke, fog, rain, and other occlusions in the atmosphere. But because LiDAR sensors operate independently of ambient light (they project their own light), they are not affected by darkness, shadows, sunlight, or oncoming headlights.

LiDAR sensors are typically more expensive than RADAR because of their relative mechanical complexity. They are increasingly used in conjunction with cameras because LiDAR cannot detect colors (such as the ones on traffic lights, red brake lights, and road signs), nor can it read text as well as cameras can. Cameras can do both of those things, but they require more processing power behind them to perform these tasks.

GPS/GNSS (Global Positioning System / Global Navigation Satellite System)

In order to make self-driving vehicles a reality, we require a high-precision navigation system. Vehicles today are using the Global Navigation Satellite System (GNSS). GNSS is more than just the “GPS” that everyone knows about.

GPS, which stands for Global Positioning System, is a constellation of more than 30 satellites circling the planet. Each satellite emits extremely accurate time and position data continuously. When a receiver gets usable signals from at least four of these satellites, it can work out its position by trilateration (often loosely called triangulation). The more usable signals it gets, the more accurate the results.
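The geometry behind this can be shown with a deliberately simplified example. The sketch below works on a flat, two-dimensional map with three fixed reference points and known distances; the function name `trilaterate_2d` and all numbers are hypothetical. A real GNSS receiver solves the same kind of problem in three dimensions and must also estimate its own clock error, which is why it needs at least four satellites.

```python
import math


def trilaterate_2d(anchors, distances):
    """Find (x, y) from three known points and the distance to each of them.

    Subtracting the first circle equation from the other two removes the
    squared unknowns and leaves two linear equations, solved by Cramer's rule.
    """
    (x1, y1), (x2, y2), (x3, y3) = anchors
    d1, d2, d3 = distances
    a11, a12 = 2 * (x2 - x1), 2 * (y2 - y1)
    a21, a22 = 2 * (x3 - x1), 2 * (y3 - y1)
    b1 = d1**2 - d2**2 + x2**2 + y2**2 - x1**2 - y1**2
    b2 = d1**2 - d3**2 + x3**2 + y3**2 - x1**2 - y1**2
    det = a11 * a22 - a12 * a21
    if abs(det) < 1e-12:
        raise ValueError("reference points must not lie on one line")
    x = (b1 * a22 - a12 * b2) / det
    y = (a11 * b2 - b1 * a21) / det
    return x, y


# Hypothetical setup: three reference points and a receiver actually at (3, 4).
anchors = [(0.0, 0.0), (10.0, 0.0), (0.0, 10.0)]
distances = [math.dist((3.0, 4.0), a) for a in anchors]
print(trilaterate_2d(anchors, distances))
```

With perfect distances the receiver position is recovered exactly; in practice every distance carries some error, which is why additional usable signals improve the result.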

But GPS is not the only global positioning system. There are multiple constellations of GNSS satellites orbiting the earth right now:

- GPS – USA

- GLONASS – Russia

- Galileo – Europe

- BeiDou – China

The best GNSS systems installed in today’s vehicles have the ability to utilize two or three of these constellations. Using multiple frequencies provides the best possible performance because it reduces errors caused by signal delays, which are sometimes caused by atmospheric interference. Also, because the satellites are always moving, tall buildings, as well as hills and other obstructions, can block a given constellation at certain times. Therefore, being able to access more than one constellation mitigates this interference.

Consumer-type (non-military) GNSS provides positional accuracy of about one meter (39 inches). This is fine for the typical navigation system in a human-operated vehicle. But for real autonomy, we need centimeter-level accuracy.

GNSS accuracy can be improved using a regional or localized augmentation system. There are both ground and space-based systems in use today that provide GNSS augmentation. Ground-based augmentation systems are known collectively as GBAS, while satellite or space-based augmentation systems are known collectively as SBAS.

A few examples of how GNSS and other ADAS sensors work together

When we drive into a covered parking garage or tunnel, the GNSS signals from the sky are completely blocked by the roof. IMU (inertial measurement unit) sensors can sense changes in acceleration in all axes, and perform a “dead reckoning” of the vehicle’s position until the satellites return. Dead reckoning accuracy drifts over time, but it is very useful for short durations when the GNSS system is “blind.”
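Dead reckoning and its drift can be demonstrated with a very small sketch. It is one-dimensional, uses simple step-by-step integration, and all numbers (a 10 Hz IMU, a constant accelerometer error of 0.05 m/s²) are assumptions chosen only to make the effect visible.

```python
def dead_reckon(position_m, velocity_m_s, accelerations_m_s2, dt_s):
    """Integrate acceleration samples into velocity, then into position (1D)."""
    for accel in accelerations_m_s2:
        velocity_m_s += accel * dt_s        # first integration: speed
        position_m += velocity_m_s * dt_s   # second integration: distance
    return position_m, velocity_m_s


# Hypothetical scenario: the car cruises at a steady 10 m/s through a tunnel.
DT_S = 0.1          # assumed IMU sample period (10 Hz)
BIAS_M_S2 = 0.05    # assumed small, uncorrected accelerometer error

for seconds in (10, 60):
    steps = round(seconds / DT_S)
    true_pos, _ = dead_reckon(0.0, 10.0, [0.0] * steps, DT_S)
    est_pos, _ = dead_reckon(0.0, 10.0, [BIAS_M_S2] * steps, DT_S)
    print(f"after {seconds:>2} s: drift = {est_pos - true_pos:.2f} m")
```

Under these assumptions the position error is about 2.5 meters after ten seconds but roughly 90 meters after a minute, because a constant acceleration error grows with the square of the elapsed time. That is why dead reckoning is only trusted for short gaps.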

Under any driving conditions, cameras, LiDAR, SONAR, and RADAR sensors can provide the centimeter-level positional accuracy that GNSS simply cannot (without RTK, or real-time kinematic, correction). They can also sense other vehicles, pedestrians, and more, which GNSS is not meant to do: the satellites are not sensors, they simply report their time and position very accurately.

In cities, the buildings create a so-called “urban canyon” where GNSS signals bounce around, resulting in multipath interference (the same signal reaches the GNSS antenna at different times, confusing the processor). The IMU can dead-reckon under these conditions to provide vital position data, while the other sensors (cameras, LiDAR, RADAR, and SONAR) continue to sense the world around the vehicle on all sides.

ADAS Sensor Advantages and Disadvantages

Each of the sensors used in ADAS vehicles has strengths and weaknesses which are explained below.

- LiDAR is great for seeing in 3D and does well in the dark, but it can’t see color. LiDAR can detect very small objects, but its performance is degraded by smoke, dust, rain, etc. in the atmosphere. It requires less external processing than cameras, but it is also more expensive than cameras.

- Cameras can see whether the traffic light is red, green, amber, or other colors. They’re great at “reading” signs and seeing lines and other markings. But they are less effective at seeing in the dark, or when the atmosphere is dense with fog, rain, snow, etc. They also require more processing than LiDAR.

- RADAR can see farther up the road than other range-finding sensors, which is essential for high-speed driving. It works well in the dark and when the atmosphere is occluded by rain, dust, fog, etc. It can’t build models as precisely as cameras or LiDAR, or detect very small objects as other sensors can.

- SONAR sensors are excellent at close proximity range-finding, such as parking maneuvers, but not good for distance measurements. They can be disturbed by wind noise, so they don’t work well at high vehicle speeds.

- GNSS, combined with a frequently updated map database, is essential for navigation. But raw GNSS accuracy of a meter or more is not sufficient for fully autonomous driving, and without a line of sight to the sky, a GNSS receiver cannot navigate at all. For autonomous driving, GNSS must be integrated with other sensors, including an IMU, and be augmented with an RTK, SBAS, or GBAS system.

- IMU systems provide the dead reckoning that GNSS systems need when the line of sight to the sky is blocked or disturbed by signal multipath in the “urban canyon.”

These sensors complement each other and allow the central processor to create a three-dimensional model of the environment around the vehicle, to know where to go and how to get there, to follow the rules of driving, and react to the expected and unexpected that happens on every roadway and parking lot.
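One simple way to picture how sensors complement each other is to combine two distance readings of the same object, trusting the more precise sensor more. The sketch below uses inverse-variance weighting, a common textbook technique that I am using here purely as an illustration; it is not a description of how any particular ADAS computer works, and the error figures are made up.

```python
def fuse(estimates):
    """Combine (value, standard_deviation) pairs by inverse-variance weighting.

    Returns the fused value and its standard deviation. Readings with a
    smaller standard deviation receive a proportionally larger weight.
    """
    if not estimates:
        raise ValueError("need at least one estimate")
    weights = [1.0 / (sigma * sigma) for _, sigma in estimates]
    total = sum(weights)
    value = sum(w * v for w, (v, _) in zip(weights, estimates)) / total
    sigma = (1.0 / total) ** 0.5
    return value, sigma


# Hypothetical readings of the distance to the car ahead, in meters:
radar_reading = (50.0, 0.5)   # assumed less precise at this range
lidar_reading = (50.4, 0.1)   # assumed more precise in clear weather
distance, uncertainty = fuse([radar_reading, lidar_reading])
print(f"fused distance: {distance:.2f} m (+/- {uncertainty:.2f} m)")
```

The fused value lands close to the more precise reading, and its uncertainty is smaller than that of either sensor alone. If fog degraded the LiDAR, its assumed error would be raised and the result would lean toward the RADAR instead.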

In short, we need them all, or a combination of them, to achieve ADAS and eventually, autonomous driving.

The objective of this article was to introduce the technological backbone of ADAS. My next article will cover ADAS testing, ADAS standards and safety protocols, and ADAS in relation to Pakistani motor vehicle rules and infrastructure.

Be sure to stay tuned!

Exclusively written for Automark Magazine, December 2024, by Muhammad Usman Iqbal, Head of Quality Department, Foton Jw Auto Park (Pvt.) Limited.